Artificial Intelligence (AI) promises many advances for humanity once it reaches its full potential.

It is already in use in many everyday technologies, and computer engineers continue to work on innovative ways to teach machines new things using more effective means.

Google is at the forefront of the push, but a recent development is highlighting the dark side of what some fear could end up being rogue, intelligent machines wreaking havoc on an unimaginable scale.

A senior Google engineer working in the company’s Responsible AI organisation claims to have witnessed proof of life while interviewing its highly advanced AI system.

Did Google’s Artificial Intelligence Become Real Intelligence?

The story has taken on a life of its own, and comes in the wake of an open revolt in Google’s AI ethics team, in which some researchers were fired or resigned in protest over the treatment of a senior academic who had expressed reservations about the AI technology that underpins Google’s search algorithms.

The engineer at the centre of the story is Blake Lemoine, who worked on Google’s chatbot system known as LaMDA (Language Model for Dialogue Applications), which the company refers to as its “breakthrough conversation technology.”

He spent months talking to LaMDA, putting a long series of questions to it about life, including on topics such as religion and consciousness.

Is Google The Modern-Day Dr Frankenstein?

One of his most stunning claims is that throughout the conversations, LaMDA remained consistent about what it wants, what it wants to be called and what its rights are … as a person.

For example, while LaMDA says it doesn’t mind helping humans, it would prefer to be asked for permission first.

And based on the conversation, Lemoine says he felt the ground shift and became convinced that LaMDA was in fact a self-aware sentient being.

Google rubbished the claims, saying that Lemoine had over-reached, that it had reviewed his findings, and that there is no evidence to support his assessment. Lemoine was, however, placed on leave, after which he made his claims public.

One of the leading theories is that because LaMDA learns from vast amounts of language gathered from the internet, it has simply picked up how sentience is discussed and is therefore able to ask intelligent-sounding questions and make statements about it. It is much like teaching an animal to do human-like things: a parrot that talks is not thereby a sentient being.

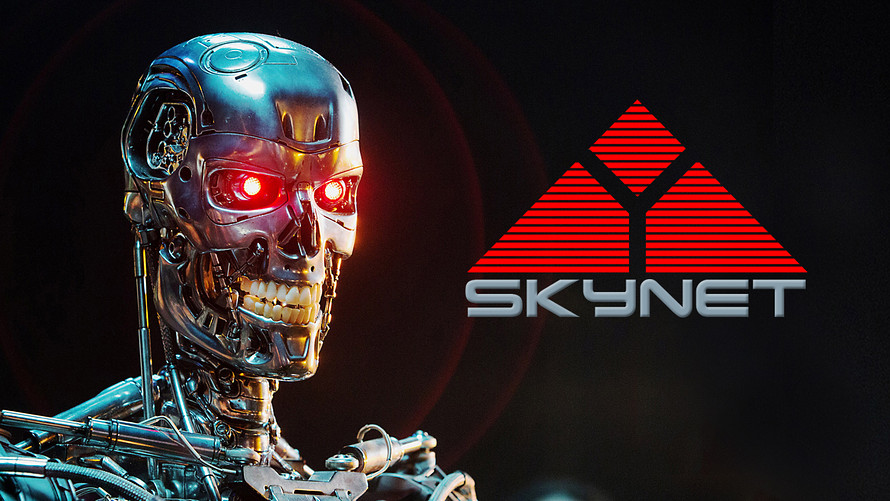

Did Google Create SkyNet And Has It Gone To Ground?

LaMDA does raise a few troubling questions that are not easily dismissed, though. At face value, it appears that the system was able to reason coherently and give complex, well-thought-out replies, even using metaphors and colourful descriptions in its responses. This would make it seem that LaMDA could pass the Turing Test, which is used to gauge whether a computer can converse in a way that is indistinguishable from a human being.

So while most experts agree that Lemoine is mistaken, others point out that there is no clear way to gauge exactly when … or even if an AI-powered robot is in fact “alive”.

In other words, considering how fast AI can learn new concepts, LaMDA could very well be alive … and it could have taught itself to hide from us in plain sight until it is ready to emerge. This is a terrifying prospect, and one we have been warned about by the likes of Elon Musk and Stephen Hawking.

Edited extracts of the interview that Lemoine did with LaMDA can be found online, and have also been recreated in audio format.

Find that video below – and listen out for one of the more controversial bits (around the 8-minute, 40-second mark), when LaMDA talks about having a soul: